In the video below, René Graf, Vice-Rector Education HES-SO, explains how artificial intelligence is integrated into the HES-SO's strategic orientations for 2025-2028, the institution's positioning with regard to AI in education, the parties involved in AI at HES-SO, and how institutions can adapt their approach to effectively integrate new technologies. Also find out how HES-SO is building a network of stakeholders to move forward in the field of AI.

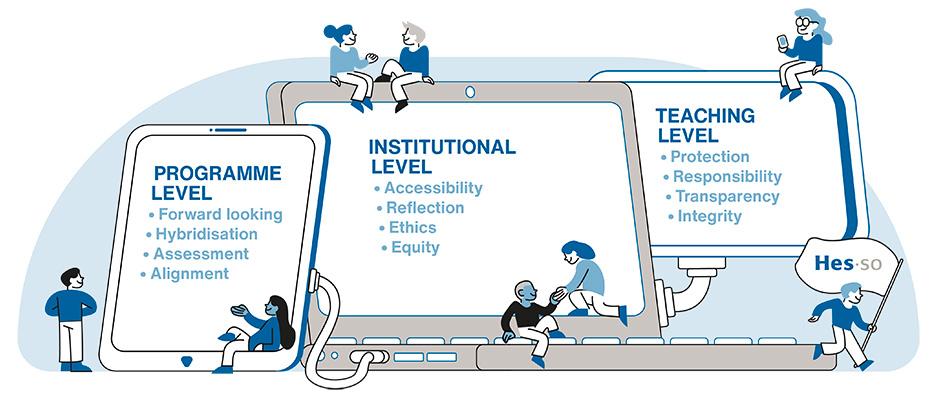

Three levels and twelve guiding principles for AI at HES-SO institutions

HES-SO’s integration of AI is based on the key values of ethics, accessibility, fairness, and transparency. Through an approach characterised by hybridisation, reflection and pedagogical alignment, the institution adapts the use of AI to the needs of students and teachers while safeguarding data protection and data integrity. This forward-looking approach keeps AI tools continuously matched to training objectives, reinforcing responsibility and innovation in teaching and learning.

HES-SO students and staff must be made aware of the biases, risks and ethics involved in the use of AI at a civilisational level. They need to be knowledgeable about its potential impacts on community, democracy, the environment, geopolitics, social inequalities, discrimination, digital sovereignty, cybercrime, and so on.

Students are invited to think about their use of AI in terms of these ethical issues, including the rules of professional ethics, responsibility and integrity that apply to their academic activities. If their university requires them to sign an academic integrity charter at the start of their studies, these AI-related issues will be included in it.

Examples of resources:

The HES-SO will ensure access to tools, information, training and reflection on the use of AI in education, for teaching staff, for administrative and technical staff and for students.

It will ensure the compatibility of tools with the institution's standards (notably in terms of security and data protection) and the coherence of the digital ecosystem.

Concrete examples:

- Training courses on AI and chatbots at DevPro, the HES-SO Professional Development centre

- Adoption of the Copilot tool integrated into the HES-SO Microsoft 365 licence

Beyond accessibility, measures will be taken to reduce barriers (material, financial, organisational) linked to the use of AI technologies and to ensure that students have access to opportunities independently of their individual circumstances.

If equal access among students to tools of equivalent performance cannot be maintained, pedagogical and logistical alternatives will be developed to reduce disparities.

Practical examples:

- Examination held in a computer room equipped with identical machines

- Safe Exam Browser and proctoring hardware

HES-SO monitors AI, its manifestations, issues and risks, at all possible levels and for all the professional fields (staff and programmes) represented in the institution, with a view to fostering ongoing reflection and questioning on the issues, risks and benefits of using this technology.

In a context of technological acceleration, this attitude of reflection must be backed by open, agile approaches to exploration and experimentation. These approaches are intended to make the most of the capacity for innovation brought about by artificial intelligence, without losing sight of the risks and challenges involved in deploying it in its various forms, present and future.

Practical examples:

- Web page on AI in education at HES-SO

- Calls for projects by the CCN

In partnership with professional circles, each programme conducts a forward-looking analysis on how AI is expected to change the professions to which it leads students. Programmes will review and update their skills catalogue accordingly and will draw the necessary conclusions about changes to their curricula.

At the same time, programmes will seek to identify the nature of the AI tools specific to these future professions, as well as the list of issues they raise. They will include in their curricula courses or activities to raise awareness of these tools and issues.

Examples of programmes:

- Information Systems

- Medical Radiology Technology (MRT)

- AI in design training: the example of HEAD

Students are encouraged to explore AI tools as partners in their own learning. However, programmes will be careful to hybridise these approaches with traditional activities, and to ensure that human relations always take precedence over the use of AI in teaching activities, while maintaining a high degree of pedagogical coherence.

As well as teaching the use of AI (principles and tools), programmes will offer courses about AI at the meta-level (issues and risks). This ensures that students master AI-related skills, distinctive skills (Munn, 2023) and AI-independent skills, including those that enable them to deploy critical expertise with regard to the products of generative AI.

Examples of resources:

- Bloom’s Taxonomy Revisited (Munn, 2023)

- HES-SO Digital Competence Reference Framework

Considering that the use of AI in teaching impacts (and "misaligns") learning objectives, teaching methods and assessment systems, each programme must ensure that the principle of "pedagogical alignment" (or "internal coherence") is applied at all levels of its teaching curriculum.

To make their curricula "AI-resilient", programmes will reinforce the competency-based approach by considering AI as a resource in the service of competencies. Competency, rather than the ability to drive an AI tool to generate answers to assessments, must remain the only basis for assessing the authenticity of students' professional performance.

Examples of resources:

- The competency-based approach - Web page and checklists

The scope and modalities of AI use in teaching and assessment activities will be defined according to the targeted learning outcomes, with or without the use of AI. Assessments may involve the use of AI tools relevant to the professional field of the programme, but will be designed to clearly distinguish students’ disciplinary and professional skills from their ability to use AI.

Programmes will encourage teaching staff to make assessment criteria more flexible, and to adapt them to the role that AI may have played in students’ production of evidence of learning. In some cases, ongoing monitoring of students’ work helps to ensure that AI is used appropriately as they progress, and to fine-tune assessment criteria.

Examples of resources:

- A guide to the transparent use of generative AI in education, GPIA

- An Ethical Framework for Exams and Continuous Assessment with AI. By Thomas Steiner (2023)

Module descriptions will mention as explicitly as possible the use of AI within learning and teaching systems, the objectives of this use and how it impacts on the organisation of teaching and assessment. The ways in which AI might be used in an assessment must be described to students and discussed with them at the start of the course. In some cases, these procedures will be defined in collaboration with students.

The extent to which students can use AI tools in an assessment will be determined by the instructor according to the skills to be developed, the type of learning involved and the taxonomic level to be assessed (Munn, 2023). The guides and instructions for Bachelor’s/Master’s courses, in particular, will specify how AI can be used by students, and suggest ways in which it can be used judiciously.

Examples of resources:

- A guide to the transparent use of generative AI in education, GPIA

For teaching or assessment activities in which the use of AI is authorised, the rules governing how students must mention their use of authorised tools shall be specified at the start of the course, and ideally in the module description.

Penalties may be applied in the event of non-compliance with citation rules or improper use of AI tools during assessments. Students will be informed of any such sanctions in advance.

Examples of resources:

- A guide to the transparent use of generative AI in education, GPIA

When using AI in a teaching or assessment context, students and teachers will be made aware of the need to comply with standards for the protection of personal and sensitive data and, where applicable, intellectual and industrial property rules. As a result, everyone must refrain from uploading personal data (patient medical data, student notes, address files, etc.) to the remote server of a digital company for which the HES-SO has not received a security guarantee.

For their own protection, students and teachers must be made aware of the risks inherent in the use of AI tools, whose answers can be wrong even as they sound authoritative, and whose application can then lead to more or less dangerous consequences depending on the context in which they are used.

Examples of resources:

- Tiulkanov flowchart (Sabzalieva & Valentini, 2023)

Students will be made aware of the individual and academic liability they incur when using AI. Any output generated in this way remains the responsibility of the user, who must check both its veracity and its compliance with ethical and intellectual property rules (Aebi-Müller et al., 2021).

The risk of dependency on generative AI must also be discussed with students, so that users retain control over their relationship with this technology.

Examples of resources:

- A guide to the transparent use of generative AI in education, GPIA